Rod Rinkus Research Statement 2017

NOTE: This research statement is more slanted towards the computational aspects of my research, i.e., machine learning (ML) per se: download 2018 Research Statement for more emphasis on neurobiology.

SDR, in which each item (percept, concept) is represented by a small subset of binary units chosen from a much larger field of units, where subsets can generally intersect, and intersection size can represent item similarity, has received relatively little attention in recent decades compared to mainstream methods, e.g., Backprop, MLP, ConvNets, Restricted Boltzmann, GPMs, etc. However, SDR, particularly as implemented in Sparsey, provides massive, hitherto still largely misapprehended, computational advantages for machine learning (ML), artificial general intelligence (AGI), etc. Most importantly, as my 1996 thesis and subsequent publications (2010 and 2014) have shown, representing information using SDR admits an algorithm that can both store (learn) a new item and retrieve the best-matching item (recognition, inference) in fixed time. If one can statistically guarantee that the SDR coding field’s storage capacity is large enough to handle the expected informational load of the domain / task (I elaborate on this caveat in a final section), then this fixed time performance effectively becomes constant [“O(1)”] time complexity for both storage and best-match retrieval, a capability which, to my knowledge, has not been demonstrated for any other computational method, including locality-sensitive / semantic hashing, tree-based methods, DL methods [including DL augmented by reinforcement learning, external memory, e.g., Neural Turing Machine (NTM), or an adversarial learning protocol (e.g., generative adversarial nets (GANs)], etc. Moreover, Sparsey possesses it for sequences, as well as purely spatial patterns.

While DL approaches are indeed making impressive strides on hard problems, e.g., AlphaGo, ImageNet, speech recognition, they do not possess O(1) learning. They are founded upon slow, incremental, gradient- and MCMC-based learning methods, generally requiring many epochs. It is readily acknowledged, including by DL’s leading figures, that without using massive power, e.g., using GPUs, DL learning would have unacceptably long learning times. In fact, most recently, Hinton has stated (in Axios interview) that he no longer sees backpropagation as essential to intelligence and is calling for a paradigm shift. Moreover, ConvNet-like solutions in particular depend on tied / shared parameters (i.e., to compute average gradient, ultimately to learn a single basis for all units in a given feature map), but this entails massive data movement (e.g., accumulating gradient information at a central locale for averaging) and massive data movement means massive power consumption. Thus, DL has a looming problem regarding scaling to truly massive datasets and truly complex queries over such data: learning either takes too much time or too much power. In fact, I think that benchmark sites such as Kaggle should now publish not just training time, but total power used during training as well, i.e., a combined time-power metric by which to judge models.

I also want to emphasize that the recent breakthroughs fueling the DL renaissance, e.g., drop-out, RELU, reinforcement learning, GANs, external memory, are largely principles that are orthogonal to whether gradient / MCMC-type or simpler, local, non-optimization-centric learning methods are used. Thus, these meta-methods, especially RL and GANs, can be readily applied to Sparsey as well. Beyond Sparsey’s time complexity advantage, which eventually overrides all other considerations as we scale to truly massive data sets, Sparsey’s sub-symbolic representation and learning algorithm constitute a radically different conception for how the domain’s similarity / statistical structure (of all orders, not simply pairwise), i.e., latent variables, are efficiently learned, i.e., a radically new conception of how probabilities are represented and manipulated in the brain (Rinkus 2017). And, since Sparsey is a recurrent model designed from inception to handle spatiotemporal (sequence) data, Sparsey also constitutes a radically new implementation of GPMs. Sparsey falls into the instance/exemplar-based class of statistical models in which what is explicitly stored (learned), on the basis of single trials, are the memory traces of the individual exemplars experienced, i.e., episodic memory traces. Because a) individual input exemplars are represented as SDRs, which are stored in superposition, and b) Sparsey’s learning algorithm preserves (spatiotemporal) similarity from the input space into the code (SDR) space, the higher-order similarity (statistical) structure over the exemplars, i.e., the generative model or semantic memory, emerges in the pattern of intersections over the episodic traces. Thus, the generative model, which drives classification and probabilistic inference, emerges automatically as a computationally free by-product of the explicit storage of episodic memory traces in superposition. This differs massively from the recent approaches to integrating episodic memory to DL (e.g. NTM) in which the episodic store is physically separate from the DL network, and moreover, the individual episodic traces are in general physically disjoint (i.e., stored localistically). Thus, in such models, not only is the learning of the generative model not accomplished computationally for free, but the movement of information back and forth between the physically separate generative model and episodic store entails non-trivial power consumption.

As noted above, Sparsey does single-trial, on-line learning of spatiotemporal (sequential) input patterns, embedding chains of SDRs (essentially Hebbian phase sequences of “cell assemblies”) as episodic memory traces of the inputs. However, it is also essential that Sparsey is hierarchical, allowing arbitrarily many levels, where each level consists of multiple coding fields (proposed as analogs of cortical macrocolumns, or “macs”) and the time constant (persistence) of the codes (SDRs) increases (typically, doubles) with level. Thus, more precisely, Sparsey embeds hierarchical, spatiotemporal memory traces using both chaining within levels and chunking between levels, e.g., a single code in a level J mac can become associatively linked with two successive codes in a level J-1 mac, i.e., a form of compression (see page, page, and page for more details).

While informational items are represented sub-symbolically as SDRs, I am deeply interested in explaining how progressively higher-level informational structures / symbols, e.g., phonemes, syllables, morphemes, words, etc., and more generally, recursive part-whole compositions, automatically emerge from the more or less continuous, multimodal input stream. The fact that the generalities that automatically emerge in Sparsey are represented by patterns of intersections over SDRs (more generally, over chains of SDRs, and more generally still, over hierarchical chains of SDRs) of particular experienced inputs, suggests that Sparsey may have greater power for modeling / explaining the often quite idiosyncratic nature of classes / rules / schemas learned and used by people, e.g., in language learning, cf. overgeneralization in children.

From 2010-2016, Neurithmic Systems focused on further developing Sparsey's underlying architecture, algorithms, and theory and applying it to spatiotemporal (e.g., Weizmann, KTH video benchmarks) and spatial data (e.g., MNIST). Sparsey has not yet reached SOA classification accuracy, though it is close and I expect to attain SOA accuracy in the near future: Sparsey has a very large parameter space, which I am in the process of searching for optimal regimes. In any case, due to its extreme algorithmic efficiency, Sparsey learns these data sets in just minutes, without any machine parallelism (MP). Thus, even without any other changes, porting Sparsey onto a GPU framework would likely immediately reduce its learning times by ~100x, making it much faster than mainstream SOA ML methods. However, other forms of MP, e.g., ASIC, would likely provide much greater efficiency and speed, as demonstrated by Prof. Paul Franzon's (NCSU) research (pub1 and pub2). In fact, Sparsey is an ideal candidate for implementing in a Memristor or PCM crossbar framework, which would yield far greater speedup.

I believe Sparsey constitutes a paradigm shift in knowledge representation, and consequently, in representation learning, knowledge acquisition, automated knowledge base construction, inference engines, statistical relational AI, etc. I’ve worked for many years on this technology, focusing more on developing core competencies / functionalities that would apply generically to virtually all ML / AGI applications, particularly those involving spatiotemporal / sequential domains, e.g., video event recognition, speech recognition, NLP, text mining, biosequence mining. In the process, I (sometimes with a team) have built an extensive software implementation incorporating many principles / mechanisms some of which were sketched herein and some of which are patented or patent-pending. Yet, I have envisioned an expansive program of research, including many specific sub-projects, that could easily occupy many years, but that will also yield essential AGI technology and products beginning immediately, i.e., within a few months, e.g., attaining SOA accuracy on various ML benchmarks but with orders of magnitude faster learning.

Additional section elaborating on Sparsey’s computational efficiency

I stated earlier that Sparsey’s core algorithm (for learning and inference) runs in fixed time, i.e., the number of operations needed either to store a new item or retrieve the best-matching item remains fixed as the number of stored items increases. A Sparsey mac (SDR coding field) is an associative memory in which items (memory traces) are stored in superposition. Thus, as the number of items stored grows, interference (crosstalk) will increase, reducing the average retrieval accuracy over the set of stored items. We define a mac's storage capacity to be the number of items that can be safely stored, i.e., stored so that average retrieval accuracy over all stored items exceeds a defined threshold, e.g., 97%. My 1996 Thesis results showed that a mac's storage capacity scales well, i.e., linearly in the weights and super-linearly in the units. Nevertheless, the numbers of units and weights needed to safely store N items grow with N. Since the number of operations needed to store or retrieve an item involves an iteration over the weights to the mac (i.e., each cell must sum its inputs), the best we can say for an individual mac is that it has fixed run time but not that it truly has O(1) time complexity.

However, if we can construct an overall system—specifically, a hierarchy of macs—in such a way that, throughout a lifetime operating and in a naturalistic domain (i.e., a domain having 1/f statistics, i.e., a domain whose objects/events are strongly recursively compositional):

- no mac ever exceeds its capacity

- the rate at which macs approach capacity, i.e., the rate at which new inputs occur, decreases quickly, e.g., exponentially, with level; and

- processing (learning / retrieving) an input entails a single iteration over all the macs, e.g., a single bottom-up, level-by-level processing pass over all the macs;

then the overall system can remain able to store new inputs without losing old knowledge and while retaining fixed time storage and best-match retrieval over potentially very long lifetimes. I refer to such a system as effectively possessing O(1) time complexity storage and best-match retrieval. Point 3 is needed because if the run time of each mac is fixed, then the sum of the run times of all macs is also fixed. Indeed, Sparsey's algorithm satisfies Point 3. Moreover, In practice, as can be seen in many of this Website's animations, only a small fraction of the macs activate, and thus contribute to run time, on each time step. [Furthermore, it is not necessary that a processing pass be limited to only a single iteration over all macs (though that is the case for the current version of Sparsey): any fixed number of iterations over the macs implies that the total processing time is fixed.]

With regard to Point 1, there are two scenarios by which a mac can avoid exceeding capacity over the lifetime of the overall model. Either: A) we can "freeze" the mac when it reaches capacity, preventing any further codes from being stored in it; or B) it can happen that the set of novel inputs generated by the naturalistic input domain is smaller than the mac's capacity. Broadly, scenario A dominates in the lower level macs and scenario B dominates at the higher levels. Part of the reason scenario B dominates in higher level macs is the freezing that is enforced in lower level macs.

First we consider the lower level macs, for concreteness, the first internal level (L1) of macs, e.g., V1. L1 macs have small receptive fields (RFs), a relatively tiny aperture onto the visual field. Sparsey assumes the input level (L0) is a array of binary pixels. Thus, an L1 mac's RF might be a 6x6-pixel array. Furthermore, we assume input video frames are preprocessed by being edge-filtered, binarized, and skeletonized. For naturalistic video, this generally yields frames containing one/few edge segments, often with quite low curvature, within such a small aperture. In addition, Sparsey implements the policy that a mac only activates if the number of active units in its RF is within a small range. Suppose that range is maximally tight, e.g., [6,6]. In that case, there are C(36,6) (about 2 million) possible input patterns for which the L1 mac will become active, i.e., activate an SDR. However, given the preprocessing, only a small fraction of those, perhaps in the tens of thousands, are likely to ever occur. But also, because of the preprocessing, the patterns that do occur are overwhelmingly likely to be edge-like. That is, salt-and-pepper noise or even patterns consisting of more than 2 or 3 small edge-like pieces, will be relatively rare: input patterns consisting of one or two edge-like pieces will be far more likely. Yet, for biologically realistic instantiations of a mac, e.g., Q=70, K=20, likely at most only a few thousand codes could be safely stored (despite the fact that the code space is formally 2070). Thus, as the eye experiences the world, it is likely that the number of unique inputs this mac experiences, will quickly exceed its capacity. Thus, in order to prevent this mac from losing memories, we will have to freeze it.

We must now consider the consequences of freezing a mac. We will refer to the set of codes stored in the mac, or rather the inputs they represent, as the mac's basis. This consideration is approached with two questions.

- Given the assumptions above, i.e., the preprocessing, the tight range of input activation required to activate the mac, and the naturalistic input domain, how good a job can the frozen basis do at representing all future inputs to the mac? More formally, we define the fidelity of a basis to be the expected intersection of the mac's input to the nearest basis element, averaged over all future inputs for which the mac activates. Given all the assumptions of this example, we expect that a basis of only a few thousand, or even a few hundred, could yield quite high fidelity, approaching 100%. We intend to conduct such an empirical analysis in the near future.

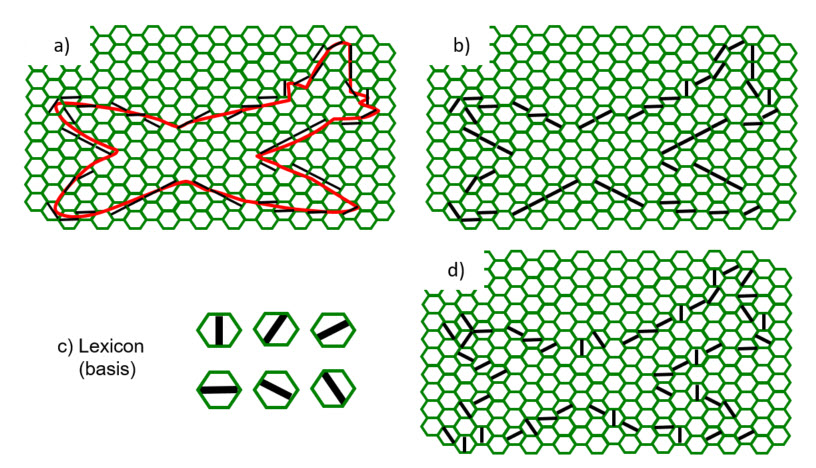

- Given that an L1 mac corresponds to only a small portion of the whole visual field and thus that its input pattern may correspond to only a small portion of an object or scene to be recognized, how high a fidelity is really required at L1? This concept is illustrated below. Broadly, the idea is simply that the object of interest here, the plane, spans many macs' RFs. The overall shape of the plane, i.e., the relative position of its wings, nose, tail, etc., is carried not by the precise features active in each RF, but by the relative positions of the RFs themselves. For example, the features in panel d have been randomly scrambled, yet the gross shape of the plane is still discernible (squint your eyes). This information, i.e., the overall pattern of active L1 macs, can, in principle, be recognized by macs at the next higher level, L2, whose RFs consist of small regions of L1 macs. Thus, to the extent that some of the meaning of an overall input image is carried by the spatial topology of the macs at a level, J, this reduces the fidelity needed by the level J macs in order for the level J+1 macs to achieve any given level of fidelity. Again, we intend to empirically analyze this principle in the near future.

Now, we consider the higher level macs. First, it is essential that any autonomous, intelligent system be able to store new information, i.e., new permanent memory traces, for its entire lifetime, cf. "lifelong learning". In practice, we will be satisfied with a statistical argument that the expected time to reach the condition where all macs comprising the overall hierarchy have reached their capacities is some large number, e.g., a human lifetime, 10 human lifetimes, etc. More precisely, we will be satisfied with a statistical argument that the rate at which new inputs are expected to occur (to macs) decreases exponentially with level. Thus, broadly, the envisioned scenario is this. As the system experiences the world, many/all of the macs at lower levels may reach capacity and be frozen rather early in the system's lifetime, but, due to the statistical argument below, many/some of the macs at higher levels will never reach capacity, and thus never be frozen, and thus be able to store new inputs throughout the expected lifetime of the system. Note, there is substantial evidence, across many/all sensority modalites, for "critical periods", i.e., the phenomenon that if a particular cortical region does not experience input, or certain classes of input, prior to some age, that region never develops the ability to recognize such inputs. I believe that the purpose of biological critical periods is precisely to serve the functionality described here, i.e., prevent too many codes from being stored in macrocolumns, and thus prevent catastrophic forgetting. The reason why biological critical periods have their particular timecourses is that over the course of evolution, the neural system has become tuned to natural statistics, i.e., to "know" about how long it should take to experience enough input to build a basis (for a mac at any particular level) with sufficient utility to guide an organism's behavior. If, for any reason, the inputs experienced by a given low-level mac, M, within its critical period do not have the range of variation that nature has "come to expect", that's too bad: the critical period still closes, i.e., learning is still shut down in M. That sounds overly severe perhaps, but the advantage is that once M's basis is frozen, the higher-level macs that receive input from M can now depend on that particular basis forever. This is needed so that that compositional concepts formed/learned at higher levels can safely become permanent, i.e., without the meanings of their constituent parts, e.g., represented by M's basis elements, changing (even though M's basis is not as rich as it might have been).

So, by what reasoning can we claim that the rate at which macs at higher levels approach capacity likely slows down exponentially with level?

First, as described above, the particular preprocessing used and the tight criteria for activating a mac already greatly constrain the set of inputs expected to be experienced and stored in a mac. Suppose that we impose that following the point of freezing, not only will no new codes ever be stored (learned) in the mac, but also that only the set of already stored codes will ever be allowed to activate in the mac. Now, suppose that the RF of a level J+1 mac is a set of level J macs. That is, the boundary of the level J+1 mac follows exactly the boundary of the set of level J macs comprising its RF. That means that following the point at which all L1 macs freeze, the set possible of inputs to a level J+1 mac that can ever possibly occur is fixed. Suppose also that a level J+1 mac only activates if the number of active level J macs in its RF is within a tight range. For concreteness, suppose an L2 mac's RF consists of 9 L1 macs and that the L2 mac will ony activate if exactly three of its nine L1 macs is active, i.e., if the number of active L1 macs is in the range [3,3]. Thus, the space of all possible inputs to the L2 mac can be partitioned into sets corresponding to C(9,3)=84 3-part compositions. Suppose that L1's critical period has elapsed (though, the freezing actually occurs on a mac-by-mac basis) and that the basis size of all the frozen L1 macs is 100. In this case, the total number of actual input patterns that the L2 mac can ever experience for the remainder of the system's life is 84x100x100x100 = 84x106.

This may seem like a large space, but we must compare it to the total number of unique L0 input patterns that were initially possible, i.e., prior to any learning at any level, within the "composite L1 RFs" corresponding to the 84 specific 3-part compositions. Let's consider just one of those 84 3-part compositions. We said above that the space of possible patterns that would cause any of the L1 macs to activate was C(36,6) (~2 million). However, due to the filtering, we speculated that only perhaps a few tens of thousand patterns, let's say 104, for concreteness, would actually ever arise in the RF of any given L1 mac. Thus, the total unique patterns for a single 3-part composition is 1012, and the total for all 84 3-part compostions is 84x1012. Thus, the effect of enforcing a critical period at L1 (i.e., shutting off learning in an L1 mac once 100 codes have been stored in it) has been to reduce the input space to the L2 mac by 6 orders of magnitude.

The above reasoning applies at every level. In general, we could quantify the reduction in input space at level J+1 post freezing of level J macs, but the broad conclusion is that the combined effect of hierarchy, compositional RFs, and critical periods, reduces the space of possible inputs to a level J mac exponentially relative to its initial size, i.e., prior to any learning. Note that all weights are zero at "birth". Further, the size of that relative reduction increases exponentially with level.

However, our ultimate goal (Point 2, near top of page) is to show that the expected rate at which new inputs occur decreases exponentially with level. If that is true, then macs at the higher levels of a model, even having only a small number of (e.g., <10) levels could have very low expected rates of occurrence of new features. Such a model would therefore have a very long expected lifetime operating in a natural world without exhausting its memory capacity. Broadly, the intution that supports this is that natural worlds have 1/f statistics, i.e., the expected frequency of input patterns, i.e., features, decreases approximately linearly with the size/complexity of the features. This reflects the strongly, hierarchical, recursive, compositional structure of the natural world, both in space and time, i.e., entities are made of parts, which are made of smaller scale parts, etc. The forces/relations that exist between parts at the same scale and across scales severely limits the configuration spaces of wholes (and intermediate compositions) and therefore the possible appearances of wholes. What this essentially means is that only a relatively tiny fraction of the space of all possible inputs can actually occur, but those that do occur tend to recur far more frequently than chance, i.e., a "heavy tail". If a relatively small set of input patterns occur far more frequently than chance, that implies that the rate at which new patterns occur must be much lower than chance. And, note that a novel input, X, is assigned a code only on its first occurrence: all subsequent occurrences of X simply reactivate its previously assigned code, i.e., X is recognized. Moreover, the sensitivity of a mac's match computation (of a new input to the set of previously stored inputs) can be parametrically varied. In general, there will be a hypersphere around X within which a novel input, X', will, with high probability, cause X's code to reactivate exactly. This acts to further reduce the effective rate at which new codes are assigned, i.e., the rate at which the mac approaches its storage capacity.

Sparsey has a further property that exploits the above "heavy tail" property, metaplasticity. Specifically, the ability of a synaptic weight to change decreases as a function of the timecourse of its hisory of increases, i.e., as a function of its history of pre-post coincidences. First, realize that Sparsey's rule for changing a synaptic weight is quite simple: whenever there is a pre-post coincidence, the weight is (re)set to the maximum possible weight, i.e., binary "1". It will then decay passively, initially slowly, but with increasing acceleration toward "0". However, if another pre-post occurrs within a window of the prior pre-post, the weight is set back to "1" and the passive decay rate decreases. In fact, after very few such (sufficiently close in time) pre-posts, the passive decay rate is set to zero, thus leaving the weight permanently at "1". The specific (parametrized) rule is described in detail in my 2014 paper. The ultimate effect of this metaplasticity rule is to strongly favor forming permanent memories of inputs, which occur more than once, even if their absolute rates of occurrence are quite low (i.e., the "long tail"), while allowing memories of inputs that occur only once to fade away.

Sparsey has one further principle that further reduces the rate at which a mac adds new codes. It is the principle that the number of time steps that a code stays active, i.e., its persistence, increases with level. In almost all studies to date, we have simply doubled persistence with level. This explicitly halves the rate at which new codes are assigned at each higher level and acts on top of the statistical principles described above.

While not a proof, the above principles collectively support the claim that the expected rate of occurrence of new inputs drops exponentially with level and therefore that we can expect hierarchies of macs to have long operational lifetimes in natural worlds without ever exhausting memory capacity of the macs at higher levels, and thus, without ever exhausting the capacity of whole hierarchies (whole models). Empirically verifying and quantifying this property is a high priority task for the near future. As part of this analysis I will also compute fidelity measures for each level. That is, in addition to the requirement that the overall model retains the ability to learn new permanent memory traces throughout its life, it must also be the case that the model builds an internal model that captures the domain's underlying statistics and thus does well at recognizing new instances and making predictions. Given that the algorithm that runs in each mac, for both learning and best-match retrieval, has a fixed number of operations for the life of the system, and that an instance of either learning or retrieval for the whole model involves a single iteration over the macs comprising the hierarchy, the time complexity of learning and best-match retrieval for the whole model is also fixed for the life of the system, and thus, effectively O(1).